In a press release, India's Ministry of Electronics and Information Technology explained the reason behind the move: the potential for AI tools to be misused "to harm users, disseminate false information, manipulate elections or impersonate individuals has increased significantly".

To address this, India has proposed draft rules that would require mandatory labeling of AI-generated content on social media platforms such as YouTube and Instagram. Platforms would have to let users declare whether the content they are uploading is "synthetically generated information".

Companies such as Meta and Google already apply some form of AI labeling on their platforms, asking creators at upload time whether their content was made with AI.

YouTube adds an "Altered or synthetic content" label to AI-generated videos, along with a description of how the video was made. This description can give details about where the content came from and whether it has been meaningfully altered with AI.

However, most of these measures are still reactive. Labels are often added only after a video has already been posted, in cases where the creator did not declare that it was made with AI.

India's proposed amendments go a step further. Platforms would have to deploy appropriate technical measures to verify AI-generated content themselves, rather than relying only on creators to disclose it.

Platforms that fail to comply could face penalties under the regulations.

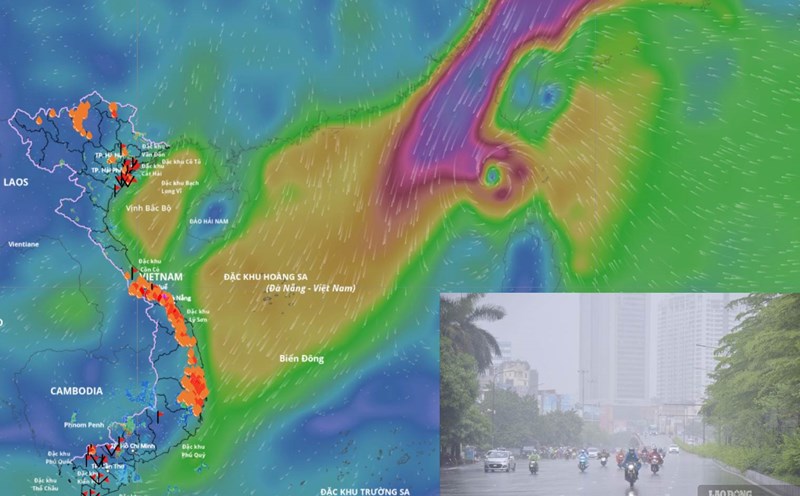

With more than 900 million internet users and many ethnic and religious communities, it is understandable that the Indian government is taking strong action to regulate AI and deepfakes.